Meta’s introduction of Llama Stack at the Meta Connect developer conference marks a significant shift towards simplifying AI deployment for businesses.

The complexity of integrating AI systems into existing IT frameworks has been a major hurdle for companies looking to adopt AI technology. Overcoming it typically requires significant in-house expertise and resources, which puts AI's benefits out of reach for many businesses.

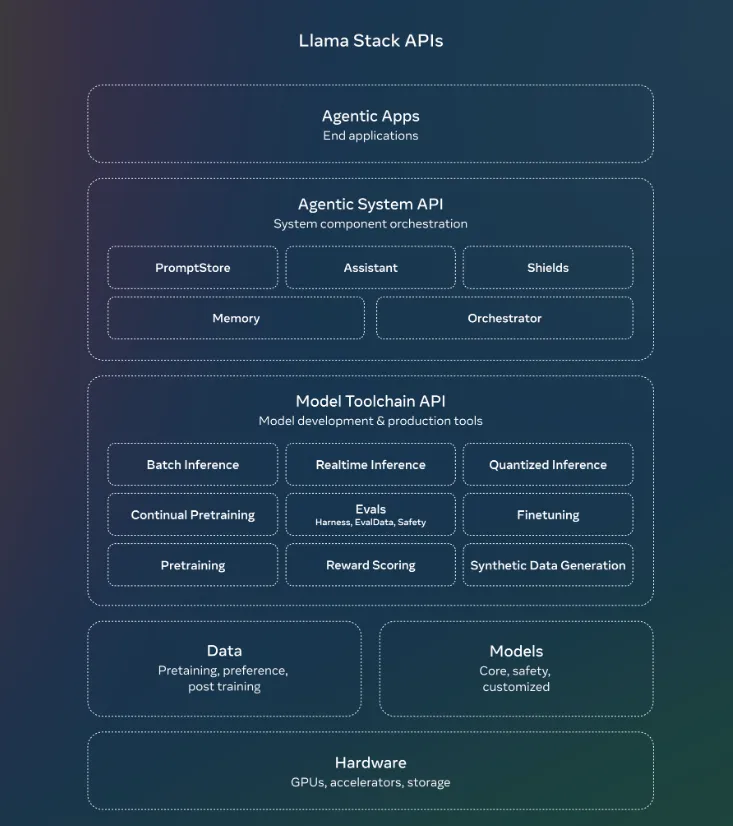

Llama Stack addresses these issues by offering a standardized API for model customization and deployment across various computing environments. This approach reduces the need for specialized knowledge and resources, so organizations can implement AI solutions more easily. By streamlining fine-tuning, synthetic data generation, and application building, Llama Stack aims to be a one-stop solution for businesses that want to incorporate AI into their operations without assembling the tooling themselves.

Furthermore, Meta's partnerships with leading cloud providers and technology firms make Llama Stack widely available. This availability supports a range of deployment options, from on-premises data centers to public clouds, catering to businesses with diverse IT strategies. The initiative lowers the barriers to AI adoption and provides a flexible foundation for AI strategies that balance performance, cost, and data privacy. This move could accelerate AI adoption across industries, giving businesses of every size the opportunity to innovate and compete more effectively.

Why Should You Care?

Meta's Llama Stack distributions aim to make AI deployment simpler for businesses:

– They simplify integrating AI into existing IT infrastructure.

– They offer a turnkey solution for organizations without extensive in-house AI expertise.

– They provide a standardized API for model customization and deployment.

– Through partnerships with major cloud providers, they offer flexibility in where AI workloads run.

– They address the technical complexities of deploying large language models.

– They cover both powerful cloud-based models and lightweight versions suitable for edge devices.

– They open doors to innovation and competition for businesses of all sizes.